I PASSED!

Getting Started

AWS Regions

- AWS has regions all around the world

- Names can be us-east-1, eu-west-3…

- A region is a cluster of data centers

- Most AWS services are region-scoped

How to choose an AWS Region?

- Compliance: with data governance and legal requirements, data never leaves a region without your explicit permission

- Proximity to customers: reduced latency

- Available services within a region: new services and new features aren’t available in every region

- Pricing: pricing varies region to region and is transparent in the service pricing page

AWS Availability Zones

- Each region has multiple AZs, for example:

- ap-southeast-2a

- ap-southeast-2b

- ap-southeast-2c

- Each AZ is one or more discrete data centers with redundant power, networking and connectivity

- they are separate from each other, so that they are isolated from disasters

- they are connected with high bandwidth, ultra low latency networking

AWS Points of Presence (Edge locations)

- Content is delivered to end users with lower latency

IAM and AWS CLI

IAM

- Identity and Access Management, Global service

- Root Account: created by default, shouldn’t be used or shared

- Users are people within your organization, and can be grouped

- Groups only contain users, not other groups

- Users don’t have to belong to a group, and a user can belong to multiple groups

IAM Permissions

- Users or Groups can be assigned JSON documents called policies

- These policies define the permissions of the users

- In AWS you apply the least privilege principle, don’t give more permissions than a user needs

IAM Policies Structure

- Consist of

- Version: policy language version, always include ‘2012-10-17’

- Id: an identifier for the policy (optional)

- Statement: one or more individual statements (required)

- Each statement consists of

- Sid: an identifier for the statement (optional)

- Effect: whether the statement allows or denies access (Allow, Deny)

- Principal: account/user/role to which this policy applies

- Action: list of actions this policy allows or denies

- Resource: list of resources to which the actions apply

- Condition: conditions for when this policy is in effect (optional)
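
As a minimal sketch of the structure above, the following Python snippet builds a policy document and serializes it to JSON. The Sid, bucket name, and IP range are hypothetical placeholders. Principal is left out because the sketch assumes a policy attached to a user or group, where the principal is implied, and the optional Id is also omitted.

```python
import json

# Hypothetical example: read-only access to one S3 bucket from one IP range.
policy = {
    "Version": "2012-10-17",  # policy language version
    "Statement": [
        {
            "Sid": "AllowListAndRead",  # optional statement identifier
            "Effect": "Allow",          # Allow or Deny
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-bucket",    # placeholder bucket
                "arn:aws:s3:::example-bucket/*",  # objects in that bucket
            ],
            # Optional: only applies when the request comes from this range.
            "Condition": {"IpAddress": {"aws:SourceIp": "203.0.113.0/24"}},
        }
    ],
}

print(json.dumps(policy, indent=2))
```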

How can users access AWS?

- AWS Management Console: protected by password + MFA

- AWS Command Line Interface: protected by access keys

- AWS SDK: for code, protected by access keys

- Access key ID = username

- Secret access key = password

IAM Roles for services

- Some AWS services will need to perform actions on your behalf

- we will assign permissions to AWS services with IAM Roles

- Common roles:

- EC2 instance roles

- lambda function roles

- roles for CloudFormation

IAM Security Tools

- IAM credentials report (account-level)

- a report that lists all your account’s users and the status of their various credentials

- IAM Access Advisor (user-level)

- Access Advisor shows the service permissions granted to a user and when those services were last accessed

- you can use this information to revise your policies

IAM Guidelines and Best practices

- Don’t use the root account except for AWS account setup

- One physical user = one AWS user

- assign users to groups and assign permissions to groups

- create a strong password policy

- use and enforce the use of MFA

- create and use roles for giving permissions to AWS services

- use access keys for CLI and SDK

- Audit permissions of your account with the IAM Credential Report

- Never share IAM users and access keys

EC2

- EC2 is one of the most popular AWS offerings

- EC2 = Elastic Compute Cloud = Infrastructure as a Service

- It mainly consists in the capability of

- Renting virtual machines (EC2)

- Storing data on virtual drives (EBS)

- Distributing load across machines (ELB)

- Scaling the services using an auto-scaling group (ASG)

- Knowing EC2 is fundamental to understanding how the cloud works

EC2 Instance Types - Overview

- you can use different types of EC2 instances that are optimised for different use cases

- e.g. m5.2xlarge

- m: instance class

- 5: generation

- 2xlarge: size within the instance class
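
The naming convention above can be sketched as a small parser. This is a simplified illustration: the function name and the regular expression are assumptions made for this example, and the pattern only covers the plain class + generation + size form shown in the notes (names with extra suffix letters, such as m5a.large, are rejected).

```python
import re
from typing import NamedTuple


class InstanceType(NamedTuple):
    instance_class: str
    generation: int
    size: str


def parse_instance_type(name: str) -> InstanceType:
    """Split a name like 'm5.2xlarge' into class, generation and size."""
    # Simplified pattern: letters (class), digits (generation), '.', size.
    match = re.fullmatch(r"([a-z]+)(\d+)\.([a-z0-9]+)", name)
    if match is None:
        raise ValueError(f"unrecognized instance type: {name!r}")
    instance_class, generation, size = match.groups()
    return InstanceType(instance_class, int(generation), size)


print(parse_instance_type("m5.2xlarge"))
```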

General Purpose

- Great for a diversity of workloads such as web servers or code repositories

- Balance between

- Compute

- Memory

- Networking

Compute Optimized

- Great for compute intensive tasks that require high performance processors

- Batch processing workloads

- media transcoding

- high performance web servers

- high performance computing

- scientific modeling and machine learning

- dedicated gaming servers

Memory Optimized

- Fast performance for workloads that process large data sets in memory

- high performance, relational/non-relational databases

- distributed web scale cache stores

- in memory databases optimized for BI

- applications performing real time processing of big unstructured data

Storage Optimized

- great for storage intensive tasks that require high, sequential read and write access to large data sets on local storage

- high frequency online transaction processing systems

- relational and NoSQL databases

- cache for in memory databases

- data warehousing applications

- distributed file systems

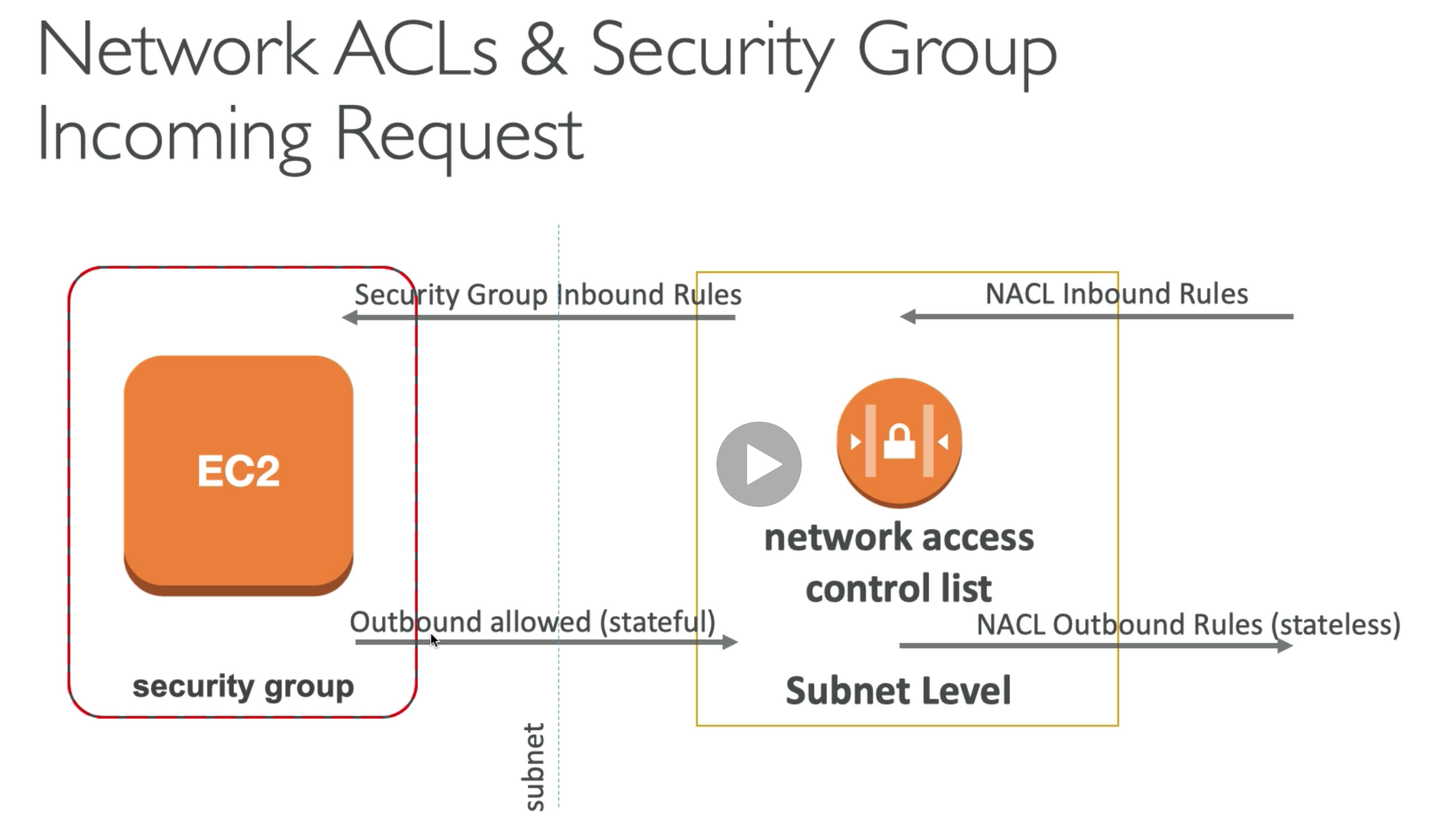

Security Groups

- the foundation of network security in AWS

- they control how traffic is allowed into or out of our EC2 instance

- security groups only contain allow rules

- security group rules can reference an IP range or another security group (inbound/outbound rules)

Good to know

- security groups can be attached to multiple instances, and one instance can have multiple security groups attached to it

- security groups are locked down to a region and VPC

- security groups live outside the EC2 instance: if traffic is blocked, the EC2 instance won’t see it (doesn’t know it tried to get in)

- if your application is not accessible (time out), then it’s a security group issue

- if your application gives a connection refused error, then it’s an application error or it’s not launched

- all inbound traffic is blocked by default

- all outbound traffic is authorized by default
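
The timeout versus refused rule of thumb above can be sketched as a small connectivity probe. The function names and messages are made up for this example, and the mapping from error type to cause is only the heuristic from the notes, not a definitive diagnosis.

```python
import socket


def diagnose(error: Exception) -> str:
    """Map a connection error to the likely cause, per the rule of thumb."""
    if isinstance(error, (socket.timeout, TimeoutError)):
        # Packets were silently dropped before reaching the instance.
        return "timeout: likely a security group issue"
    if isinstance(error, ConnectionRefusedError):
        # Traffic reached the instance, but nothing is listening on the port.
        return "refused: likely an application issue (or it is not running)"
    return "other error"


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Try a TCP connection and report what happened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "reachable"
    except OSError as error:
        return diagnose(error)
```

For instance, `probe("203.0.113.10", 22)` (a placeholder address) would check whether SSH is reachable on that host.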

Classic Ports to know

- 22 = SSH (Secure Shell) - log into a Linux instance

- 21 = FTP (File Transfer Protocol) - upload files into a file share

- 22 = SFTP (Secure File Transfer Protocol) - upload files using SSH

- 80 = HTTP - access unsecured websites

- 443 = HTTPS - access secured websites

- 3389 = RDP (Remote Desktop Protocol) - log into a Windows instance

EC2 Instances purchasing options

- on-demand instance: short workload, predictable pricing

- reserved, minimum 1 year:

- reserved instances: long workloads

- convertible reserved instances: long workloads with flexible instances

- scheduled reserved instances: example - every Thursday between 3 and 6 pm

- Spot instance: short workloads, cheap and can lose instances (less reliable)

- dedicated hosts: book an entire physical server, control instance placement

EC2 on demand

- pay for what you use

- Linux - billing per second, after the first minute

- all other OS - billing per hour

- has the highest cost but no upfront payment

- no long-term commitment

- recommended for short term and un-interrupted workloads, where you can’t predict how the application will behave

EC2 reserved instances

- up to 75% discount compared to on demand

- reservation period: 1 year or 3 years

- purchasing options: no upfront / partial upfront / all upfront

- reserve a specific instance type

- recommended for steady state usage applications (think database)

Convertible reserved instance

- can change the EC2 instance type

- up to 54% discount

scheduled reserved instances

- launch within time window you reserve

- when you require a fraction of day / week / month

- still commitment over 1 to 3 years

EC2 Spot instance

- can get a discount of up to 90% compared to on demand

- instances that you can lose at any point of time if your max price is less than the current spot price

- the MOST cost efficient instances in AWS

- useful for workloads that are resilient to failure

- not suitable for critical jobs or databases

Spot instance requests

- define max spot price and get the instance while current spot price < max

- the hourly spot price varies based on offer and capacity

- if the current spot price > your max price you can choose to stop or terminate your instance with a 2 minutes grace period

- other strategy: spot block

- block spot instance during a specified time frame (1 to 6 hours) without interruptions

- in rare situations, the instance may be reclaimed

- to stop using spot instances, first cancel the spot instance request, then terminate the associated spot instances

Spot fleets

- spot fleets = set of spot instances + (optional) on demand instances

- the spot fleet will try to meet the target capacity with price constraints

- define possible launch pools: instance type, OS, AZ

- can have multiple launch pools, so that the fleet can choose

- spot fleet stops launching instances when reaching capacity or max cost

- strategies to allocate spot instances

- lowest price: from the pool with the lowest price (cost optimization, short workload)

- diversified: distributed across all pools (great for availability, long workloads)

- capacity optimized: pool with the optimal capacity for the number of instances

- spot fleets allow us to automatically request spot instance with the lowest price
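
To make the difference between the lowest price and diversified strategies concrete, here is a toy allocation sketch. The pools, prices, and function name are hypothetical, and the round-robin used for diversified is a simplification rather than how the real fleet scheduler works.

```python
from collections import Counter

# Hypothetical launch pools: (instance type, AZ) -> current spot price per hour.
pools = {
    ("m5.large", "us-east-1a"): 0.035,
    ("m5.large", "us-east-1b"): 0.031,
    ("c5.large", "us-east-1a"): 0.040,
}


def allocate(pools: dict, target: int, strategy: str) -> Counter:
    """Return how many instances to launch from each pool."""
    if strategy == "lowest_price":
        # Everything comes from the single cheapest pool.
        cheapest = min(pools, key=pools.get)
        return Counter({cheapest: target})
    if strategy == "diversified":
        # Spread instances evenly (round-robin) across all pools.
        names = sorted(pools)
        return Counter(names[i % len(names)] for i in range(target))
    raise ValueError(f"unknown strategy: {strategy}")


print(allocate(pools, 6, "lowest_price"))
print(allocate(pools, 6, "diversified"))
```

With lowest price, losing the one cheap pool interrupts the whole fleet; with diversified, only a fraction of the instances is affected, which is why it suits long workloads.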

EC2 dedicated hosts

- an Amazon EC2 dedicated host is a physical server with EC2 instance capacity fully dedicated to your use

- dedicated hosts can help you address compliance requirements and reduce costs by allowing you to use your existing server-bound software licenses

- allocated for your account for a 3 year period reservation

- more expensive

- useful for software that has a complicated licensing model (BYOL - bring your own license)

- or for companies that have strong regulatory or compliance needs

Elastic IP

- when you stop and then start an EC2 instance, it can change its public IP

- if you need to have a fixed public IP for your instance, you need an Elastic IP

- an Elastic IP is a public IPv4 address you own as long as you don’t delete it

- you can attach it to one instance at a time

- with an Elastic IP, you can mask the failure of an instance or software by rapidly remapping the address to another instance in your account (not common)

- you can only have 5 Elastic IPs in your account (by default)

- Overall, try to avoid using Elastic IPs

- they often reflect poor architectural decisions

- instead, use a random public IP and register a DNS name to it

- or use a load balancer and don’t use a public IP

Placement Groups

- Sometimes you want control over the EC2 instance placement strategy

- when you create a placement group, you specify one of the following strategies for the group

- Cluster: clusters instances into a low latency group in a single AZ

- Spread: spreads instances across underlying hardware (max 7 instances per group per AZ) - critical applications

- Partition: spreads instances across many different partitions (which rely on different sets of racks) within an AZ; scales to 100s of EC2 instances per group (Hadoop, Cassandra, Kafka). The instances in a partition do not share racks with the instances in the other partitions, so a partition failure can affect many EC2 instances but won’t affect other partitions

Elastic Network Interfaces (ENI)

- logical component in a VPC that represents a virtual network card

- the ENI can have the following attributes

- Primary private IPv4, one or more secondary IPv4

- One Elastic IP per private IPv4

- one public IPv4

- one or more security groups

- a MAC address

- you can create ENIs independently and attach them on the fly to (or move them between) EC2 instances for failover

- bound to a specific AZ

EC2 Hibernate

- Stop: the data on disk (EBS) is kept intact in the next start

- Terminate: any EBS volumes (root) also set up to be destroyed are lost

- First start: the OS boots and the EC2 user data script is run

- Following starts: the OS boots up

- then your application starts, caches get warmed up, and that can take time

- Introducing EC2 Hibernate

- RAM state is preserved

- the instance boot is much faster (the OS is not stopped / restarted)

- under the hood: the RAM state is written to a file in the root EBS volume

- the root EBS volume must be encrypted

- Use cases

- long running process

- saving the RAM state

- services that take time to initialize

EC2 Nitro

- underlying platform for the next generation of EC2 instances

- new virtualization technology

- allows for better performance

- better networking options

- Higher Speed EBS

- better underlying security

EC2 vCPU

- EC2 instance comes with a combination of RAM and vCPU

- in some cases, you may want to change the vCPU options

- change the number of CPU cores

- change the number of vCPUs (threads) per core

- only specified during instance launch

EC2 capacity reservations

- ensure you have EC2 capacity when needed

- manual or planned end date for the reservation

- no need for 1 or 3 year commitment

- capacity access is immediate, you get billed as soon as it starts

- combine with reserved instances and savings plans to save costs

EC2 EBS

- an EBS volume is a network drive you can attach to your instances while they run

- it allows your instances to persist data, even after their termination

- they can only be mounted to one instance at a time (in some cases, some EBS can be attached to multiple EC2 instances at same time)

- they are bound to a specific AZ

- think of them as a network USB stick

EBS volume

- it’s a network drive

- it uses the network to communicate with the instance, which means there might be a bit of latency

- it can be detached from an EC2 instance and attached to another one quickly

- it’s locked down to an AZ

- an EBS volume in us-east-1a cannot be attached to an instance in us-east-1b

- to move a volume across, you first need to snapshot it

- have a provisioned capacity

- you get billed for all the provisioned capacity

- you can increase the capacity of the drive over time

EBS volume types

- gp2 / gp3: general purpose SSD

- gp3: newer generation, IOPS and throughput are independent

- gp2: IOPS and throughput are linked

- io1 / io2: highest performance SSD

- st1: low cost HDD, for throughput intensive workloads

- sc1: lowest cost HDD

- EBS volumes are characterized in Size / Throughput / IOPS

EBS multi-attach - io1/io2 family

- attach the same EBS volume to multiple EC2 instances in the same AZ

- each instance has full read and write permissions to the volume

- applications must manage concurrent write operations

- must use a file system that’s cluster-aware

EBS encryption

- when you create an encrypted EBS volume, you get the following

- data at rest is encrypted inside the volume

- all the data in flight moving between the instance and the volume is encrypted

- all snapshots are encrypted

- all volumes created from the snapshot are encrypted

- encryption and decryption are handled transparently

- encryption has a minimal impact on latency

- EBS encryption leverages keys from KMS (AES-256)

- copying an unencrypted snapshot allows encryption

EBS RAID

RAID 0

- increase performance

- combining 2 or more volumes and getting the total disk space and I/O

- but if one disk fails, all the data is lost

- using this, we can have a very big disk with a lot of IOPS

RAID 1

- increase fault tolerance

- mirroring a volume to another

- we have to send the data to two EBS volumes at the same time (2 * network)
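
The RAID 0 versus RAID 1 trade-off can be illustrated with simple arithmetic. This sketch assumes identical volumes, and the sizes and IOPS figures are made-up example numbers; it only models usable capacity and write IOPS, not real-world overhead.

```python
def raid0(size_gib: int, iops: int, count: int) -> tuple:
    """Striping: capacity and I/O add up across all volumes."""
    return (size_gib * count, iops * count)


def raid1(size_gib: int, iops: int) -> tuple:
    """Mirroring two volumes: every write goes to both, so usable
    capacity and write IOPS stay those of a single volume."""
    return (size_gib, iops)


# Two hypothetical 500 GiB volumes with 4000 IOPS each.
print("RAID 0:", raid0(500, 4000, 2))
print("RAID 1:", raid1(500, 4000))
```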

EBS delete on termination

- controls the EBS behaviour when an EC2 instance terminates

- by default, the root EBS volume is deleted (attribute enabled)

- by default, any other attached EBS volume is not deleted (attribute disabled)

- this can be controlled by the AWS console / CLI

- use case: preserve root volume when instance is terminated (disable the attribute)

EBS Snapshots

- make a backup (snapshot) of your EBS volume at a point of time

- not necessary to detach volume to do snapshot, but recommended

- can copy snapshots across AZ or Region

- can create volume from snapshot

EFS - Elastic File System

- Managed NFS (network file system) that can be mounted on many EC2

- EFS works with EC2 instances in multi-AZ

- highly available, scalable, expensive, pay per use

- uses security group to control access to EFS

- compatible with Linux based AMI (not Windows)

- encryption at rest using KMS

- to mount EFS on EC2, you need to allow the EC2 security group in an inbound rule of the EFS security group

Performance and Storage Class

- Performance mode

- general purpose

- MAX I/O

- Throughput mode

- bursting

- provisioned

- Storage tiers

- standard

- infrequent access, cost to retrieve files, lower price to store

AMI Overview

- Amazon Machine Image

- a customization of an EC2 instance

- you add your own software, configuration, operating system etc…

- faster boot / configuration time because all your software is pre-packaged

- AMI are built for a specific region and can be copied across regions

- you can launch EC2 instance from

- public AMI: provided by AWS

- your own AMI: you make and maintain them yourself

- AWS marketplace AMI: an AMI someone else made

EC2 instance store

- EBS volumes are network drives with good but limited performance

- if you need a high performance hardware disk, use EC2 instance store

- better I/O performance

- EC2 instance store volumes lose their data if the instance is stopped (ephemeral)

- good for buffer / cache / scratch data / temporary content

- risk of data loss if hardware fails

- backups and replication are your responsibility

EC2 Metadata

- AWS EC2 instance metadata is powerful but one of the least known features to developers

- it allows EC2 instances to learn about themselves without using an IAM role for that purpose

- the URL is http://169.254.169.254/latest/meta-data

- you can retrieve the IAM role name from the metadata, but you CANNOT retrieve the IAM policy
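
A minimal sketch of querying the metadata URL from inside an instance, using only the standard library. The helper names are invented for this example, and the call only works on an EC2 instance. It also assumes plain unauthenticated requests are accepted; instances that enforce IMDSv2 require a session token first, which this sketch omits.

```python
import urllib.request

METADATA_BASE = "http://169.254.169.254/latest/meta-data"


def metadata_url(path: str = "") -> str:
    """Build the full URL for a metadata path such as 'instance-id'."""
    return f"{METADATA_BASE}/{path.lstrip('/')}"


def fetch_metadata(path: str = "", timeout: float = 2.0) -> str:
    """Fetch one metadata entry as text (only works on an EC2 instance)."""
    with urllib.request.urlopen(metadata_url(path), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# On an instance, this would list the attached IAM role name:
# print(fetch_metadata("iam/security-credentials/"))
```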

Elastic Load Balancer

What is load balancing?

- load balancers are servers that forward internet traffic to multiple servers (EC2 instances) downstream

Why use a load balancer?

- spread load across multiple downstream instances

- expose a single point of access (DNS) to your application

- seamlessly handle failures of downstream instances

- do regular health checks on your instances

- provide SSL termination (HTTPS) for your websites

- enforce stickiness with cookies

- high availability across zones

- separate public traffic from private traffic

- An ELB is a managed load balancer

- AWS guarantees that it will be working

- AWS takes care of upgrades, maintenance, high availability

- AWS provides only a few configuration knobs

- it costs less to set up your own load balancer but it will be a lot more effort on your end

- it is integrated with many AWS offerings / services

Health Checks

- Health Checks are crucial for load balancers

- they enable the load balancer to know if instances it forwards traffic to are available to reply to requests

- the health check is done on a port and a route (/health is common)

- if the response is not 200, then the instance is unhealthy
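
The health check described above can be sketched as a tiny client that requests a route on a port and treats anything other than a 200 response as unhealthy. The function names, the /health default, and the timeout are assumptions for this example, not the load balancer's actual implementation.

```python
import http.client


def is_healthy_status(status: int) -> bool:
    """Per the notes: only a 200 response counts as healthy."""
    return status == 200


def check_health(host: str, port: int, path: str = "/health",
                 timeout: float = 2.0) -> bool:
    """Send GET <path> to host:port and report whether it is healthy."""
    try:
        conn = http.client.HTTPConnection(host, port, timeout=timeout)
        try:
            conn.request("GET", path)
            return is_healthy_status(conn.getresponse().status)
        finally:
            conn.close()
    except (OSError, http.client.HTTPException):
        # Unreachable or misbehaving targets are treated as unhealthy.
        return False
```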

Classic Load Balancers (v1)

- supports TCP (layer 4), HTTP and HTTPS (layer 7)

- health checks are TCP or HTTP based

Application Load Balancer (v2)

- Application load balancer is layer 7 (HTTP)

- load balancing to multiple HTTP applications across machines (target groups)

- load balancing to multiple applications on the same machine (containers)

- support for HTTP/2 and WebSocket

- Support redirects (from HTTP to HTTPS)

- Routing tables to different target groups

- routing based on path in URL (example.com/users & example.com/posts)

- routing based on hostname in URL (one.example.com & other.example.com)

- routing based on query string, headers (example.com/users?id=123&other=false)

- ALB are a great fit for micro services and container based application (Docker and Amazon ECS)

- Has a port mapping feature to redirect to a dynamic port in ECS

- in comparison, we would need multiple CLB, one for each application
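
The path, hostname, and query string routing described above can be sketched as an ordered rule list where the first match wins. The rule format, target group names, and default group are hypothetical and only mimic the idea; they are not the ALB's actual rule syntax.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical listener rules, evaluated in order; the first match wins.
RULES = [
    {"host": "other.example.com", "target_group": "other-app"},
    {"path_prefix": "/users", "query": {"other": "false"},
     "target_group": "users-filtered"},
    {"path_prefix": "/users", "target_group": "users"},
    {"path_prefix": "/posts", "target_group": "posts"},
]
DEFAULT_TARGET_GROUP = "default"


def route(url: str) -> str:
    """Return the target group name that should receive this request."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)
    for rule in RULES:
        if "host" in rule and parts.hostname != rule["host"]:
            continue
        if "path_prefix" in rule and not parts.path.startswith(rule["path_prefix"]):
            continue
        wanted = rule.get("query", {})
        if any(value not in query.get(key, []) for key, value in wanted.items()):
            continue
        return rule["target_group"]
    return DEFAULT_TARGET_GROUP


print(route("http://example.com/users?id=123&other=false"))
```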

Target Groups

- EC2 instances can be managed by an Auto Scaling Group - HTTP

- ECS tasks (managed by ECS itself) - HTTP

- Lambda function - HTTP request is translated into a JSON event

- IP addresses - must be private IPs

- ALB can route to multiple target groups

- health checks are at the target group level

Network Load Balancer (v2)

- network load balancer (layer 4)

- forward TCP and UDP traffic to your instance

- handle millions of request per second

- less latency ~ 100 ms (vs 400 ms for ALB)

- NLB has one static IP per AZ, and supports assigning Elastic IP (helpful for whitelisting specific IP)

- NLB are used for extreme performance, TCP or UDP traffic

- Not included in AWS free tier

Sticky Sessions (Session Affinity)

- it is possible to implement stickiness so that the same client is always redirected to the same instance behind a load balancer

- this works for CLB and ALB

- the cookie used for stickiness has an expiration date you control

- use case: make sure the user doesn’t lose their session data

- enabling stickiness may bring imbalance to the load over the backend EC2 instances

- Application based cookies

- custom cookie

- generated by the target

- can include any custom attributes required by the application

- cookie name must be specified individually for each target group

- don’t use AWSALB, AWSALBAPP, AWSALBTG (reserved for use by the ELB)

- application cookie

- generated by the load balancer

- cookie name is AWSALBAPP

- Duration based cookie

- cookie generated by the load balancer

- cookie name is AWSALB for ALB, AWSELB for CLB

Cross Zone Load Balancing

- each load balancer instance distributes traffic evenly across all registered instances in all AZs

- ALB

- always on (can’t be disabled)

- no charges for inter AZ data

- NLB

- disabled by default

- you pay charges for inter AZ data if enabled

- CLB

- Through console => enabled by default

- through CLI / API => disabled by default

- no charges
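
A quick arithmetic sketch shows why cross zone load balancing matters when AZs hold different numbers of instances. It assumes client traffic is split evenly between the load balancer nodes (one per AZ) and that every AZ has at least one instance; the function name and AZ labels are made up for this example.

```python
def traffic_share_per_instance(instances_per_az: dict, cross_zone: bool) -> dict:
    """Return the fraction of total traffic each instance in an AZ receives."""
    total_instances = sum(instances_per_az.values())
    az_count = len(instances_per_az)
    shares = {}
    for az, count in instances_per_az.items():
        if cross_zone:
            # Every instance gets an equal slice, wherever it lives.
            shares[az] = 1 / total_instances
        else:
            # Each AZ gets an equal slice, split among its own instances.
            shares[az] = (1 / az_count) / count
    return shares


layout = {"az-a": 2, "az-b": 8}
print(traffic_share_per_instance(layout, cross_zone=True))
print(traffic_share_per_instance(layout, cross_zone=False))
```

Without cross zone balancing, the two instances in the smaller AZ each carry four times the load of an instance in the larger AZ.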

SSL/TLS

- an SSL certificate allows traffic between your clients and your load balancer to be encrypted in transit

- SSL refers to Secure Sockets Layer, used to encrypt connections

- TLS refers to Transport Layer Security, which is a newer version

- TLS certificates are mainly used nowadays, but people still refer to them as SSL

- public SSL certificates are issued by Certificate Authorities (CA)

- SSL certificates have an expiration date and must be renewed

- the load balancer uses an X.509 certificate (SSL/TLS server certificate)

- you can manage certificates using ACM (AWS Certificate Manager)

- you can create or upload your own certificates

- HTTPS listener

- you must specify a default certificate

- you can add an optional list of certs to support multiple domains

- clients can use SNI (Server Name Indication) to specify the host name they reach

- ability to specify a security policy to support older versions of SSL/TLS

SSL - Server Name Indication

- SNI solves the problem of loading multiple SSL certificates onto one web server (to serve multiple websites)

- it’s a newer protocol, and requires the client to indicate the hostname of the target server in the initial SSL handshake

- the server will then find the correct certificate, or return the default one

- Only works for ALB and NLB, CloudFront

- doesn’t work for CLB
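
The certificate lookup that SNI enables can be sketched as a dictionary lookup with a default fallback. The hostnames and certificate labels are placeholders; a real server would hold certificate objects and do this selection during the TLS handshake.

```python
from typing import Optional

# Hypothetical certificate store keyed by hostname.
CERTIFICATES = {
    "one.example.com": "cert-one",
    "other.example.com": "cert-other",
}
DEFAULT_CERTIFICATE = "cert-default"


def select_certificate(sni_hostname: Optional[str]) -> str:
    """Pick the certificate for the hostname the client indicated."""
    if sni_hostname is None:
        # The client sent no SNI extension: fall back to the default.
        return DEFAULT_CERTIFICATE
    return CERTIFICATES.get(sni_hostname.lower(), DEFAULT_CERTIFICATE)


print(select_certificate("one.example.com"))
print(select_certificate("unknown.example.com"))
```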

ELB Connection Draining

- Time to complete in-flight requests while the instance is de-registering or unhealthy

- stops sending new requests to the instance which is de-registering

- between 1 to 3600 seconds, default is 300 seconds

- can be disabled (set to zero)

- set to a low value if your requests are short

Auto Scaling Group

- in real life, the load on your websites and applications can change

- in the cloud, you can create and get rid of servers very quickly

- the goal of an Auto Scaling Group is to

- scale out to match an increased load

- scale in to match a decreased load

- ensure we have a minimum and maximum number of machines running

- automatically register new instances to a load balancer

ASG attributes

- A launch configuration

- AMI + instance type

- EC2 user data

- EBS volumes

- Security groups

- SSH key pair

- min size / max size / initial capacity

- network + subnets information

- load balancer information

- scaling policies

Auto Scaling Alarms

- it is possible to scale an ASG based on CloudWatch alarms

- an alarm monitors a metric (such as average CPU)

- metrics are computed for the overall ASG instances

Auto Scaling New Rules

- it is now possible to define better auto scaling rules that are directly managed by EC2

- target average CPU usage

- number of requests on the ELB per instance

- average network in

- average network out

- these rules are easier to setup and can make more sense

Auto Scaling Custom Metric

- send custom metric from application on EC2 to CloudWatch

- Create CloudWatch alarm to react to low / high values

- use the CloudWatch alarm as the scaling policy for ASG

Good to know

- scaling policies can be on CPU, network… and can even be on custom metrics or based on a schedule

- ASGs use launch configurations or launch templates

- to update an ASG, you must provide a new launch configuration / launch template

- IAM roles attached to an ASG will get assigned to EC2 instances

- ASG are free, you pay for the underlying resources being launched

- having instances under an ASG means that if they get terminated for whatever reason, the ASG will automatically create a new one as a replacement.

- ASG can terminate instances marked as unhealthy by an ELB (and then replace them)

Auto Scaling Groups - Dynamic Scaling Policies

- target tracking scaling

- most simple and easy to setup

- example: I want the average ASG CPU to stay at around 40%

- Simple / Step Scaling

- When a CloudWatch alarm is triggered (example: CPU > 70%), then add 2 units

- when a CloudWatch alarm is triggered (example: CPU < 30%), then remove 1 unit

- Scheduled Actions

- anticipate a scaling based on known usage patterns

- example: increase the min capacity to 10 at 5pm on Fridays

- predictive scaling

- continuously forecast load and schedule scaling ahead
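
The simple / step scaling example above (add 2 units above 70% CPU, remove 1 unit below 30%) can be sketched as a pure function. The function name and the clamping to the group's min and max size are assumptions made for this illustration; cooldowns are ignored.

```python
def step_scaling(current: int, avg_cpu: float,
                 min_size: int, max_size: int) -> int:
    """Return the new desired capacity for the group."""
    if avg_cpu > 70:
        desired = current + 2   # high CPU alarm: scale out by 2
    elif avg_cpu < 30:
        desired = current - 1   # low CPU alarm: scale in by 1
    else:
        desired = current       # no alarm: keep capacity
    # The group never goes below its minimum or above its maximum size.
    return max(min_size, min(max_size, desired))


print(step_scaling(current=4, avg_cpu=85, min_size=2, max_size=10))
```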

Good metrics to scale on

- CPU Utilization

- average CPU utilization across your instances

- Request Count Per Target

- to make sure the number of requests per EC2 instances is stable

- Average network in / out

- if your application is network bound (heavy downloads / uploads)

- Any custom metric that you push using CloudWatch

Scaling Cooldowns

- After a scaling activity happens, you are in the cooldown period (default 300 seconds)

- during the cooldown period the ASG will not launch or terminate additional instances (to allow for metrics to stabilize)

- advice: use a ready to use AMI to reduce configuration time in order to be serving request faster and reduce the cooldown period

ASG default termination policy

- Find the AZ which has the most instances

- if there are multiple instances in the AZ to choose from, delete the one with the oldest launch configuration

- ASG tries to balance the number of instances across AZ by default
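
The two steps of the default termination policy listed above can be sketched as follows. The instance records and field names are hypothetical, and the sketch follows only the steps in these notes, without any further tie-breakers.

```python
from collections import Counter

# Hypothetical instances; a lower launch_config_version means an older one.
instances = [
    {"id": "i-a1", "az": "az-a", "launch_config_version": 2},
    {"id": "i-a2", "az": "az-a", "launch_config_version": 1},
    {"id": "i-a3", "az": "az-a", "launch_config_version": 2},
    {"id": "i-b1", "az": "az-b", "launch_config_version": 1},
]


def pick_instance_to_terminate(instances: list) -> str:
    """Pick the instance ID to terminate on scale in."""
    # Step 1: find the AZ with the most instances.
    az_counts = Counter(instance["az"] for instance in instances)
    busiest_az = max(az_counts, key=az_counts.get)
    # Step 2: within that AZ, pick the oldest launch configuration.
    candidates = [i for i in instances if i["az"] == busiest_az]
    oldest = min(candidates, key=lambda i: i["launch_config_version"])
    return oldest["id"]


print(pick_instance_to_terminate(instances))
```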

ASG lifecycle hooks

- by default, as soon as an instance is launched in an ASG, it’s in service

- you have the ability to perform extra steps before the instance goes in service (pending state)

- you have the ability to perform extra actions before the instance is terminated (terminating state), like extract logs, tools etc…

ASG launch template vs Launch configuration

- both

- ID of the AMI, the instance type, a key pair, security groups, and the other parameters that you use to launch EC2 instances

- Launch Configuration

- must be re-created every time

- launch template

- can have multiple versions

- create parameters subsets (partial configuration for re-use and inheritance)

- provision using both on demand and spot instances

- can use T2 unlimited burst feature

- recommended by AWS going forward

RDS

- RDS stands for relational database service

- it’s a managed DB service for databases that use SQL as a query language

- it allows you to create databases in the cloud that are managed by AWS

- Postgres

- MySQL

- MariaDB

- Oracle

- Microsoft SQL Server

- Aurora (AWS Proprietary database)

Advantages of using RDS versus deploying a DB on EC2

- RDS is a managed service

- automated provisioning, OS patching

- continuous backups and restore to specific timestamp (point in time restore)

- monitoring dashboards

- read replicas for improved read performance

- multi AZ setup for DR (Disaster Recovery)

- maintenance windows for upgrades

- scaling capability (vertical or horizontal)

- storage backed by EBS

- but you can’t SSH into your RDS instance (it’s managed by AWS)

RDS backups

- backups are automatically enabled in RDS

- automated backups

- daily full backup of the database (during the maintenance windows)

- transaction logs are backed up by RDS every 5 minutes

- ability to restore to any point in time (from oldest backup to 5 minutes ago)

- 7 days retention (can be increased to 35 days)

- DB snapshots

- manually triggered by the user

- retention of backup for as long as you want

RDS storage auto scaling

- helps you increase storage on your RDS DB instance dynamically

- when RDS detects you are running out of free database storage, it scales automatically

- avoid manually scaling your database storage

- you have to set a maximum storage threshold (maximum limit for DB storage)

- automatically modify storage if

- free storage is less than 10% of allocated storage

- low storage lasts at least 5 minutes

- 6 hours have passed since last modification

- useful for applications with unpredictable workloads

- supports all RDS database engines (MariaDB, MySQL, PostgreSQL, SQL server, Oracle)
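
The three conditions for an automatic storage modification, plus the maximum storage threshold, can be combined into one check. The function and parameter names are invented for this sketch; the thresholds are the ones listed in the notes.

```python
def should_autoscale(free_gib: float, allocated_gib: float,
                     low_storage_minutes: float,
                     hours_since_last_modification: float,
                     max_threshold_gib: float) -> bool:
    """Return True when all conditions for scaling storage are met."""
    return (
        allocated_gib < max_threshold_gib          # still room to grow
        and free_gib < 0.10 * allocated_gib        # less than 10% free
        and low_storage_minutes >= 5               # low for at least 5 minutes
        and hours_since_last_modification >= 6     # 6 hours since last change
    )


print(should_autoscale(free_gib=5, allocated_gib=100, low_storage_minutes=10,
                       hours_since_last_modification=24, max_threshold_gib=500))
```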

Read Replicas for read scalability

- up to 5 read replicas

- within AZ

- cross AZ

- cross region

- replication is ASYNC, so reads are eventually consistent (it is possible to read old data)

- replicas can be promoted to their own DB

- applications must update the connection string to leverage read replicas

Read replicas - use case

- you have a production database that is taking on normal load

- you want to run a reporting application to run some analytics

- you create a read replica to run the new workload there

- the production application is unaffected

- read replicas are used for SELECT only kind of operations (not DELETE, INSERT, UPDATE)
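
Because applications must point at the replicas themselves, a read/write split can be sketched as a small endpoint picker. The endpoint names are hypothetical and the SELECT prefix check is deliberately naive. Since replication is asynchronous, reads that must see the latest write would still need to go to the main database.

```python
import itertools

# Hypothetical endpoints for the main database and its read replicas.
WRITER_ENDPOINT = "mydb.example.internal"
READER_ENDPOINTS = [
    "mydb-replica-1.example.internal",
    "mydb-replica-2.example.internal",
]
_reader_cycle = itertools.cycle(READER_ENDPOINTS)


def pick_endpoint(sql: str) -> str:
    """Send SELECT statements to a replica, everything else to the writer."""
    is_read = sql.lstrip().upper().startswith("SELECT")
    return next(_reader_cycle) if is_read else WRITER_ENDPOINT


print(pick_endpoint("SELECT count(*) FROM orders"))
print(pick_endpoint("UPDATE orders SET status = 'paid' WHERE id = 1"))
```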

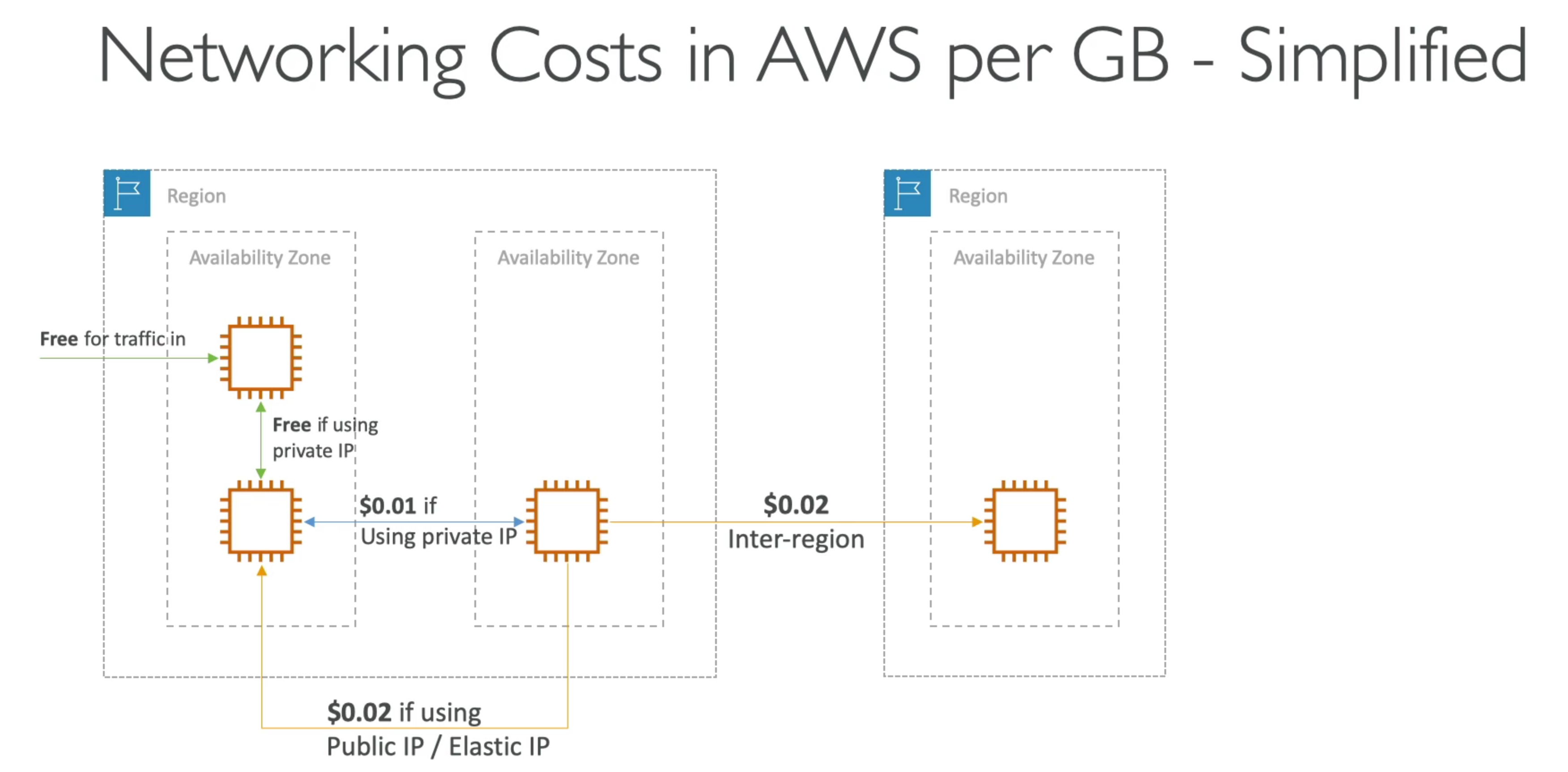

Network cost

- in AWS there is a network cost when data goes from one AZ to another

- for RDS read replicas within the same region, you don’t pay that fee

- for read replicas across regions, you need to pay

Multi AZ disaster recovery

- SYNC replication

- one DNS name - automatic app failover to standby

- increase availability

- failover in case of loss of AZ, loss of network, instance or storage failure

- no manual intervention in apps

- not used for scaling (the standby does not handle traffic; it only takes over when the master RDS instance fails)

- NOTE: the read replicas can be setup as Multi AZ for disaster recovery

RDS - from single AZ to multi AZ

- zero downtime operation (no need to stop the DB)

- just click on modify for the database

- the following happens internally

- a snapshot is taken

- a new DB is restored from the snapshot in a new AZ

- synchronization is established between the two databases

RDS security - encryption

- at rest

- possibility to encrypt the master and read replicas with AWS KMS - AES-256 encryption

- encryption has to be defined at launch time

- if the master is not encrypted, the read replica cannot be encrypted

- Transparent Data Encryption (TDE) available for Oracle and SQL server

- in flight encryption

- SSL certificate to encrypt data to RDS in flight

- provide SSL options with trust certificate when connecting to database

Encryption operations

- Encrypting RDS backups

- snapshots of un-encrypted RDS databases are un-encrypted

- snapshots of encrypted RDS database are encrypted

- can copy a snapshot into an encrypted one

- to encrypt an un-encrypted RDS database

- create a snapshot of the un-encrypted database

- copy the snapshot and enable encryption for the snapshot

- restore the database from the encrypted snapshot

- migrate applications to the new database and delete the old database

RDS security - network and IAM

- network security

- RDS database are usually deployed within a private subnet, not in a public one

- RDS security works by leveraging security groups (the same concept as for EC2 instances) - it controls which IP / security group can communicate with RDS

- access management

- IAM policies help control who can manage AWS RDS (through the RDS API)

- traditional username and password can be used to login into the database

- IAM based authentication can be used to login into RDS for MySQL and PostgreSQL

RDS - IAM authentication

- IAM database authentication works with MySQL and PostgreSQL

- you don’t need a password, just an authentication token obtained through IAM and RDS API calls

- auth token has a lifetime of 15 minutes

- benefits

- network in / out must be encrypted using SSL

- IAM to centrally manage users instead of DB

- can leverage IAM roles and EC2 instance profiles for easy integration

Amazon Aurora

- Aurora is a proprietary technology from AWS (not open sourced)

- Postgres and MySQL are both supported as Aurora DB (that means your drivers will work as if Aurora was a Postgres or MySQL database)

- Aurora is AWS cloud optimized and claims 5x performance improvement over MySQL on RDS, over 3x performance of Postgres on RDS

- Aurora storage automatically grows from 10 GB to 64 TB

- Aurora can have 15 replicas while MySQL has up to 5, and the replication process is faster

- failover in Aurora is instantaneous

- Aurora costs more than RDS (20% more), but is more efficient

Aurora High Availability and Read Scaling

- 6 copies of your data across 3 AZ

- 4 copies out of 6 needed for writes

- 3 copies out of 6 needed for reads

- self healing with peer to peer replication

- storage is striped across 100s of volumes

- one Aurora instance takes writes (master)

- automated failover for master in less than 30 seconds

- master + up to 15 Aurora read replicas serve reads

- support for cross region replication
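The quorum numbers above can be checked with a little arithmetic. This sketch assumes the 6 copies are spread evenly, 2 per AZ; the function names are made up for illustration.

```python
TOTAL_COPIES = 6   # assumed layout: 2 copies in each of 3 AZs
WRITE_QUORUM = 4   # copies needed for writes
READ_QUORUM = 3    # copies needed for reads


def can_write(lost_copies: int) -> bool:
    """Writes still succeed while at least 4 of the 6 copies are reachable."""
    return TOTAL_COPIES - lost_copies >= WRITE_QUORUM


def can_read(lost_copies: int) -> bool:
    """Reads still succeed while at least 3 of the 6 copies are reachable."""
    return TOTAL_COPIES - lost_copies >= READ_QUORUM


# Losing one whole AZ means losing 2 copies.
print(can_write(2), can_read(2))
# Losing one AZ plus one more copy means losing 3 copies.
print(can_write(3), can_read(3))
```

Under that assumption, losing a whole AZ still leaves writes available, and losing an AZ plus one more copy still leaves reads available.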

Aurora - Custom Endpoints

- define a subset of Aurora instances as a custom endpoint

- example: run analytical queries on specific replicas

- the reader endpoint is generally not used after defining custom endpoints

Aurora serverless

- automated database instantiation and auto scaling based on actual usage

- good for infrequent intermittent or unpredictable workloads

- no capacity planning needed

- pay per second, can be more cost effective

Aurora Multi-Master

- in case you want immediate failover for write node (HA)

- every node does Read and write - vs - promoting a read replica as the new master (faster failover)

Amazon ElastiCache

- the same way RDS is to get managed relational databases

- elastiCache is to get managed Redis or Memcached

- caches are in memory databases with really high performance, low latency

- helps reduce load off databases for read intensive workloads

- helps make your application stateless

- AWS takes care of OS maintenance / patching, optimization, setup, configuration, monitoring, failure recovery and backups

- using ElastiCache involves heavy application code changes

DB Cache

- the application queries ElastiCache first; if the data is not there, it gets it from RDS and stores it in ElastiCache

- helps relieve load in RDS

- Cache must have an invalidation strategy to make sure only the most current data is used in there (LRU, LFU)

User session store

- the user logs into any instance of the application

- the application writes the session data into ElastiCache

- the user hits another instance of our application

- the instance retrieves the session data and the user is already logged in

Redis

- Multi AZ with auto failover

- read replicas to scale reads and have High Availability

- data durability using AOF persistence

- backup and restore features

Memcached

- multi-node for partitioning of data (sharding)

- no high availability (replication)

- non persistent

- no backup and restore

- multi-threaded architecture

Patterns for ElastiCache

- Lazy loading

- all the read data is cached, data can become stale in cache

- write through

- adds or updates data in the cache when written to a DB (no stale data)

- session store:

- store temporary session data in a cache (using TTL features)
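A rough sketch of lazy loading versus write-through, using plain dicts as stand-ins for RDS and ElastiCache (no real client library is involved, and all names are illustrative).

```python
db = {"user:1": "Alice"}   # stand-in for RDS
cache = {}                 # stand-in for ElastiCache


def read_lazy(key):
    """Lazy loading: check the cache first, fall back to the DB on a miss."""
    if key in cache:
        return cache[key], "hit"
    value = db[key]
    cache[key] = value      # populate the cache for the next reader
    return value, "miss"


def write_db_only(key, value):
    """A write that bypasses the cache; the cached copy may now be stale."""
    db[key] = value


def write_through(key, value):
    """Write-through: update the DB and the cache together."""
    db[key] = value
    cache[key] = value


print(read_lazy("user:1"))        # first read misses and fills the cache
write_db_only("user:1", "Bob")
print(read_lazy("user:1"))        # cache still holds the old value
write_through("user:1", "Carol")
print(read_lazy("user:1"))        # cache and DB agree again
```

The stale read in the middle is the trade-off of lazy loading; a TTL on cached entries is one common way to bound how long it lasts.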

Redis use case

- Gaming leaderboard

- Redis Sorted sets guarantee both uniqueness and element ordering

- each time a new element is added, it’s ranked in real time, then added in the correct order
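To make the leaderboard idea concrete, here is a plain-Python stand-in for a sorted set. It is not the Redis API: the `Leaderboard` class is hypothetical, and it sorts on read rather than keeping order on insert the way a real sorted set does.

```python
class Leaderboard:
    """Plain-Python stand-in for a sorted set: unique members, ordered by score."""

    def __init__(self):
        self._scores = {}

    def add(self, member, score):
        # Adding the same member again overwrites its score (uniqueness).
        self._scores[member] = score

    def top(self, n):
        # Highest score first; ties are broken by member name.
        ranked = sorted(self._scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return ranked[:n]


lb = Leaderboard()
lb.add("ann", 50)
lb.add("bob", 70)
lb.add("ann", 90)   # ann appears once, with her latest score
print(lb.top(2))
```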

Route 53

- route 53 is a managed DNS (domain name system)

- DNS is a collection of rules and records which helps clients understand how to reach a server through its domain name

- in AWS, the most common records are

- A: hostname => IPv4

- AAAA: hostname => IPv6

- CNAME: hostname => hostname

- Alias: hostname => AWS resource

Overview

- route 53 can use

- public domain names you own

- private domain names that can be resolved by your instances in your VPCs

- Route 53 has advanced features such as

- load balancing (through DNS, also called client load balancing)

- health checks

- routing policy: simple, failover, geolocation, latency, weighted…

- you pay $0.5 per month per hosted zone

- the user first sends a DNS request for the hostname (myapp.mydomain.com) to route 53, asking for its IP address

- route 53 responds with the IP address for that name and a TTL

- the user’s browser then sends the HTTP request to that IP to reach the server

- the next time the user needs that name, if the cached answer has not expired (check the TTL), the browser goes directly to the last saved IP address, which saves traffic to route 53
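The TTL behaviour can be sketched as a tiny client-side cache. The `DnsCache` class, the fake resolver, and the 300 second TTL are all assumptions for illustration; time is passed in explicitly so the example is deterministic.

```python
class DnsCache:
    """Caches DNS answers until their TTL expires."""

    def __init__(self, resolver):
        self._resolver = resolver   # callable: name -> (ip, ttl_seconds)
        self._entries = {}          # name -> (ip, expires_at)
        self.lookups = 0            # how many times the resolver was asked

    def resolve(self, name, now):
        entry = self._entries.get(name)
        if entry and now < entry[1]:
            return entry[0]         # still within the TTL: no DNS query sent
        ip, ttl = self._resolver(name)
        self.lookups += 1
        self._entries[name] = (ip, now + ttl)
        return ip


def fake_resolver(name):
    # Stand-in for Route 53: always answers with the same IP and a 300 s TTL.
    return "203.0.113.10", 300


dns = DnsCache(fake_resolver)
dns.resolve("myapp.mydomain.com", now=0)     # first lookup goes to the resolver
dns.resolve("myapp.mydomain.com", now=120)   # served from the cache
dns.resolve("myapp.mydomain.com", now=300)   # TTL expired, asks again
print(dns.lookups)
```

A longer TTL means fewer queries but a slower reaction when the record changes.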

CNAME vs Alias

- AWS resource (load balancer, cloudfront…) expose an AWS hostname and you want myapp.mydomain.com

- CNAME

- points a hostname to any other hostname

- only for non root domain (something.mydomain.com)

- Alias

- points a hostname to an AWS resource

- works for root domain and non root domain (mydomain.com)

- free of charge

- native health check

Simple Routing policy

- use when you need to redirect to a single resource

- you can’t attach health checks to simple routing policy

- if multiple values (IP addresses) are returned, a random one is chosen by the client (client side load balancing)

Weighted routing policy

- control the percentage of the requests that go to specific endpoint

- helpful to test percentage of traffic on new app version for example

- helpful to split traffic between two regions

- can be associated with health checks

- but on the client side the browser is not aware that it has multiple weighted endpoints in the backend
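The share of traffic each record receives is its weight divided by the sum of all weights. A small sketch, with hypothetical record names:

```python
def traffic_share(weights: dict) -> dict:
    """Return each record's expected fraction of DNS responses."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


# e.g. send roughly 10% of requests to a new app version
print(traffic_share({"v1": 90, "v2": 10}))
```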

Latency routing policy

- redirect to the server that has the least latency close to user

- super helpful when latency of users is a priority

- latency is evaluated in terms of user to designated AWS region

- users in Germany may be redirected to the US (if that’s the lowest latency)

Route 53 health checks

- have X health checks failed => unhealthy (default 3)

- have X health checks passed => healthy (default 3)

- default health checks interval : 30 seconds (can set to 10 seconds with higher cost)

- about 15 health checkers will check the endpoint health in the background (from different regions)

- one request every 2 seconds on average (30 / 15)

- can have HTTP, TCP, and HTTPS health checks (no SSL verification)

- possible to integrate health check with CloudWatch

- health checks can be linked to route 53 DNS queries
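A sketch of the threshold logic, assuming the X checks must be consecutive (the notes only give the default of 3). The `HealthState` class is illustrative, not a Route 53 API.

```python
class HealthState:
    """Flips state only after `threshold` consecutive contrary results."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.healthy = True
        self._streak = 0   # consecutive results that disagree with current state

    def record(self, passed: bool) -> bool:
        if passed == self.healthy:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= self.threshold:
                self.healthy = passed
                self._streak = 0
        return self.healthy


endpoint = HealthState()
print([endpoint.record(False) for _ in range(3)])   # third failure flips the state

# With ~15 checkers each probing every 30 seconds, the endpoint
# sees about one request every 30 / 15 = 2 seconds.
print(30 / 15)
```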

GeoLocation routing policy

- different from latency based

- this is routing based on user location

- here we specify traffic from the UK should go to this specific IP

- should create a default policy (in case there is no match on location)

Geoproximity routing policy

- route traffic to your resources based on the geographic location of users and resources

- ability to shift more traffic to resources based on the defined bias

- to change the size of the geographic region, specify bias values

- to expand (1 to 99) - more traffic to the resources

- to shrink (-1 to -99) - less traffic to the resources

- resources can be

- AWS resources (specify AWS region)

- non-AWS resources (specify latitude and longitude)

- you must use route 53 traffic flow (advanced) to use this feature

Multi value routing policy

- use when routing traffic to multiple resources

- want to associate a route 53 health checks with records

- up to 8 healthy records are returned for each multi value query

- multi value is not a substitute for having an ELB

- client browser will randomly choose a healthy record from returned records (client side fault tolerance)

Route 53 as a Registrar

- a domain name registrar is an organization that manages the reservation of internet domain names

- GoDaddy

- Google Domains

- Domain registrar != DNS

- if you buy your domain on a 3rd party website, you can still use route 53

- create a hosted zone in route 53

- update NS records on 3rd party website to use route 53 name servers (all 4 of them)

Classic Solutions Architecture

Stateful App with shopping cart

- ELB sticky sessions

- web clients for storing cookies and making our web app stateless

- ElastiCache

- for storing sessions (alternative: DynamoDB)

- for caching data from RDS

- Multi AZ

- RDS

- for storing user data

- read replicas for scaling reads

- multi AZ for disaster recovery

- tight security with security groups referencing each other

Instantiating Applications Quickly

- EC2 instances

- use a golden AMI: install your applications, OS dependencies, beforehand and launch your EC2 instance from the golden AMI

- bootstrap using user data: for dynamic configuration, use User Data scripts

- Hybrid: mix golden AMI and User Data (Elastic Beanstalk)

- RDS databases

- restore from a snapshot: the database will have schemas and data ready

- EBS volume:

- restore from a snapshot, the disk will already be formatted and have data

Beanstalk

Developer problems on AWS

- managing infrastructure

- deploying code

- configuring all the databases, load balancers, etc…

- scaling concerns

- most web apps have the same architecture (ALB + ASG)

- all the developers want is for their code to run

- possibly, consistently across different applications and environments

Elastic Beanstalk - overview

- Elastic Beanstalk is a developer centric view of deploying an application on AWS

- it uses all the components we have seen before: EC2, ASG, ELB, RDS…

- managed service

- automatically handles capacity provisioning, load balancing, scaling, application health monitoring, instance configuration…

- just the application code is the responsibility of the developer

- we still have full control over the configuration

- Beanstalk is free but you pay for the underlying instances

Elastic Beanstalk - components

- application

- collection of Elastic Beanstalk components (environments, versions, configurations…)

- application version

- an iteration of your application code

- environment

- collection of AWS resources running an application version (only one application version at a time)

- Tiers

- web server environment tier

- worker environment tier

- you can create multiple environments (dev, test, prod…)

S3

Buckets

- Amazon S3 allows people to store objects in buckets

- buckets must have a globally unique name

- buckets are defined at the region level

- naming convention

- No uppercase

- no underscore

- 3-63 characters long

- not an ip

- must start with lowercase letter or number

Objects

- objects (files) have a key

- the key is the FULL path

- s3://my-bucket/my_folder/another_folder/my_file.txt

- key is: my_folder/another_folder/my_file.txt

- prefix is: my_folder/another_folder/

- object name is: my_file.txt

- there is no concept of directories within buckets

- just keys with very long names that contain slashes

- object values are the content of the body

- max object size is 5TB

- if uploading more than 5GB, must use multi-part upload

- metadata (list of text key / value pairs, system or user metadata)

- Tags (Unicode key / value pair - up to 10) - useful for security / lifecycle

- Version ID (if versioning is enabled)
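The key / prefix / object name split from the example path can be reproduced with plain string handling. `split_s3_uri` is a hypothetical helper, not part of any AWS SDK.

```python
def split_s3_uri(uri: str) -> dict:
    """Split an s3:// URI into bucket, key, prefix and object name."""
    scheme = "s3://"
    if not uri.startswith(scheme):
        raise ValueError("expected an s3:// URI")
    bucket, _, key = uri[len(scheme):].partition("/")
    # The prefix is everything up to and including the last slash in the key.
    prefix, sep, name = key.rpartition("/")
    return {"bucket": bucket, "key": key, "prefix": prefix + sep, "name": name}


print(split_s3_uri("s3://my-bucket/my_folder/another_folder/my_file.txt"))
```

The "folders" exist only as part of the key string, which is why the split is pure text processing.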

Versioning

- you can version your files in Amazon S3

- it is enabled at the bucket level

- same key overwrite will increment the version

- it is best practice to version your buckets

- protect against unintended deletes (ability to restore a version)

- easy roll back to previous version

- notes

- any file that is not versioned prior to enabling versioning will have version null

- suspending versioning does not delete the previous versions

Encryption for objects

- SSE-S3: encrypts S3 object using keys handled and managed by AWS

- SSE-KMS: leverage AWS KMS service to manage encryption keys

- SSE-C: when you want to manage your own encryption keys

- client side encryption

- it is important to understand which ones are adapted to which situation for the exam

S3 Default Encryption

- one way to force encryption is to use a bucket policy and refuse any API call to PUT an S3 object without encryption headers

- another way is to use the default encryption option in S3

- note: bucket policies are evaluated before default encryption

- e.g. if you have a bucket policy to reject all un-encrypted files from being upload to S3, then you can’t upload un-encrypted file even if you have the default encryption enabled.
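As an illustration of the bucket policy approach, here is one common shape of such a policy, built as a Python dict and printed as JSON. The bucket name and `Sid` are placeholders; treat this as a sketch to adapt, not a policy copied from these notes.

```python
import json

BUCKET = "my-bucket"  # hypothetical bucket name

deny_unencrypted_puts = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyUnencryptedObjectUploads",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
            # Matches PUT requests where the encryption header is absent.
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        }
    ],
}

print(json.dumps(deny_unencrypted_puts, indent=2))
```

With a policy like this in place, an upload without the header is rejected before default encryption gets a chance to apply.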

SSE-C

- Amazon S3 does not store the encryption key you provide

- HTTPS must be used (because you need to send the key in the request)

- Encryption key must be provided in HTTP headers, for every request

Client side encryption

- client library such as the Amazon S3 Encryption client

- clients must encrypt the data themselves before sending to S3

- clients must decrypt the data themselves when retrieving the data from S3

- customer fully manages the keys and encryption cycle

Encryption in transit (SSL/TLS)

- Amazon S3 exposes

- HTTP endpoint, non encrypted

- HTTPS endpoint, encryption in flight

- You are free to use the endpoint you want, but HTTPS is recommended

- most clients would use the HTTPS endpoint by default

- HTTPS is mandatory for SSE-C (because you need to send the key in the request)

Security

- User based

- IAM policies: which API calls should be allowed for a specific user from IAM console

- Resource based

- bucket policies: bucket wide rules from the S3 console - allows cross account

- Object ACL: finer grain

- Bucket ACL: less common

- Note: an IAM principal can access an S3 object if

- the user IAM permissions allow it OR the resource policy ALLOW it

- AND there is no explicit DENY

- JSON based policies

- Resources: buckets and objects

- Actions: Set of API to Allow or Deny

- Effect: Allow / Deny

- Principal: the account or user to apply the policy to

- use S3 bucket policy to

- Grant public access to the bucket

- Force objects to be encrypted at upload

- Grant access to another account (cross account)
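The access rule in the note above reduces to a single boolean expression. This sketch models only that simplified rule as written in these notes; the real policy evaluation logic has more cases.

```python
def is_allowed(iam_allows: bool, resource_allows: bool, explicit_deny: bool) -> bool:
    """(IAM allow OR resource policy allow) AND no explicit deny."""
    return (iam_allows or resource_allows) and not explicit_deny


print(is_allowed(iam_allows=False, resource_allows=True, explicit_deny=False))
print(is_allowed(iam_allows=True, resource_allows=True, explicit_deny=True))
```

An explicit deny wins regardless of how many allows are present.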

CORS

- An origin is a scheme, host, and port

- CORS means Cross-Origin Resource Sharing

- Web browser based mechanism to allow requests to other origins while visiting the main origin

- the requests won’t be fulfilled unless the other origin allows for the requests, using CORS Headers (Access-Control-Allow-Origin)

- if a client does a cross-origin request on our S3 bucket, we need to enable the correct CORS headers

- you can allow for a specific origin or * for all origins
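Since an origin is the (scheme, host, port) triple, deciding whether a request is cross-origin is a tuple comparison. A minimal sketch using the standard library; the URLs are examples and the default-port table only covers http and https.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str):
    """Return the (scheme, host, port) triple that defines an origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


def is_cross_origin(page_url: str, request_url: str) -> bool:
    return origin(page_url) != origin(request_url)


# A page on our site loading an image from an S3 bucket is cross-origin,
# so the bucket needs CORS headers that allow our site's origin.
print(is_cross_origin("https://www.example.com/index.html",
                      "https://my-bucket.s3.amazonaws.com/img.png"))
```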

S3 Access logs

- for audit purpose, you may want to log all access to S3 buckets

- any request made to S3, from any account, authorized or denied, will be logged into another S3 bucket

- that data can be analyzed using data analysis tools later (Amazon Athena)

S3 Replication (Cross-Region or Same Region)

- Must enable versioning in source and destination

- cross region replication

- same region replication

- buckets can be in different accounts

- copying is asynchronous

- must give proper IAM permissions to S3

- After activating, only new objects are replicated (existing objects will not be replicated)

- For DELETE operations

- can replicate delete markers from source to target (optional setting)

- deletions with a version ID are not replicated (to avoid malicious deletes)

- there is no chaining of replication

- if bucket one has replication into bucket two, which has replication into bucket three

- then objects created in bucket one are not replicated to bucket three

S3 Pre-signed URL

- can generate pre-signed URLs using SDK or CLI

- valid for a default of 3600 seconds

- users given a pre-signed URL inherit the permissions of the person who generated the URL for GET / PUT

S3 Storage Class

Standard - General Purpose

- High durability of objects across multiple AZ

- 99.99% availability over a given year

- sustain 2 concurrent facility failures

- use case: big data analytics, mobile and gaming applications, content distribution…

Standard - IA

- suitable for data that is less frequently accessed, but requires rapid access when needed

- 99.9% availability

- low cost compared to GP

- use cases: as a data store for disaster recovery, backups

One Zone - IA

- Same as IA but data is stored in a single AZ

- 99.5% availability

- low latency and high throughput performance

- supports SSL for data in transit and encryption at rest

- low cost compared to IA

- use cases: storing secondary backup copies of on-premise data, or storing data you can re-create

Intelligent Tiering

- same low latency and high throughput performance of S3 standard

- small monthly monitoring and auto-tiering fee

- automatically moves objects between two access tiers based on changing access patterns

- resilient against events that impact an entire AZ

Amazon Glacier

- low cost object storage meant for archiving / backup

- data is retained for the longer term (10+ years)

- alternative to on-premise magnetic tape storage

- cost per storage per month + retrieval cost

- each item in Glacier is called an archive

- archives are stored in Vaults

Amazon Glacier and Glacier Deep Archive

- Amazon Glacier - 3 retrieval options

- expedited (1 to 5 minutes)

- standard (3 to 5 hours)

- bulk (5 to 12 hours)

- minimum storage duration of 90 days

- Amazon Glacier Deep Archive

- Standard (12 hours)

- Bulk (48 hours)

- Minimum storage duration of 180 days

S3 Lifecycle Rules

- Transition actions

- it defines when objects are transitioned to another storage class

- e.g. move objects to Standard IA class 60 days after creation

- e.g. move to Glacier for archiving after 6 months

- Expiration actions

- configure to expire (delete) after some time

- e.g. access log files can be set to delete after 365 days

- e.g. can be used to delete old versions of files (if versioning is enabled)

- e.g. can be used to delete incomplete multi-part uploads
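To see how transition and expiration actions combine, here is a small simulation of one hypothetical rule set: Standard IA after 60 days, Glacier after 180 days (standing in for "6 months"), expiry after 365 days. The thresholds are taken loosely from the examples above, and the function is not an S3 API.

```python
def storage_class_for_age(age_days: int) -> str:
    """Return where an object would sit under the hypothetical rules above."""
    if age_days >= 365:
        return "EXPIRED"        # expiration action: object is deleted
    if age_days >= 180:
        return "GLACIER"        # transition action for archiving
    if age_days >= 60:
        return "STANDARD_IA"    # transition action for infrequent access
    return "STANDARD"


for age in (0, 60, 200, 365):
    print(age, storage_class_for_age(age))
```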

S3 Analytics - Storage Class Analysis

- You can set up S3 analytics to help determine when to transition objects from Standard to Standard IA

- does not work for One Zone - IA or Glacier

S3 Select and Glacier Select

- retrieve less data using SQL by performing server side filtering

- can filter by rows and columns

- less network transfer, less CPU cost client-side

S3 Event notifications

- can create as many events as desired

- SNS

- SQS

- Lambda function

- S3 event notifications typically deliver events in seconds but can sometimes take a minute or longer

- if two writes are made to a single non-versioned object at the same time, it is possible that only a single event notification will be sent

- if you want to ensure that an event notification is sent for every successful write, you can enable versioning on your bucket.

- Your S3 bucket needs to have permission to send message to SQS queue

S3 Requester Pays

- in general, bucket owners pay for all S3 storage and data transfer costs associated with their buckets

- with Requester Pays buckets, the requester instead of the bucket owner pays the cost of the request and the data download from the bucket

- helpful when you want to share large datasets with other accounts

- the requester must be authenticated in AWS (cannot be anonymous)

Glacier Vault Lock

- Adopt a WORM (Write Once Read Many) model

- lock the policy for future edits (can no longer be changed)

- helpful for compliance and data retention

S3 Object Lock (versioning must be enabled)

- adopt a WORM (Write Once Read Many) model

- block an object version deletion for a specified amount of time

- object retention

- retention period: specifies a fixed period

- legal hold: same protection, no expiry date

- Modes

- Governance mode: users can’t overwrite or delete an object version or alter its lock settings unless they have special permissions

- Compliance mode: a protected object version can’t be overwritten or deleted by any user, including the root user. When an object is locked in compliance mode, its retention mode can’t be changed and its retention period can’t be shortened.

AWS Athena

- serverless service to perform analytics directly against S3 files

- uses SQL language to query the files

- has a JDBC / ODBC driver (for BI tools)

- charged per query and amount of data scanned

- supports CSV, JSON, ORC, Avro, and Parquet (built on Presto)

- use cases: BI / Analytics / reporting / Logs / CloudTrail trails etc…

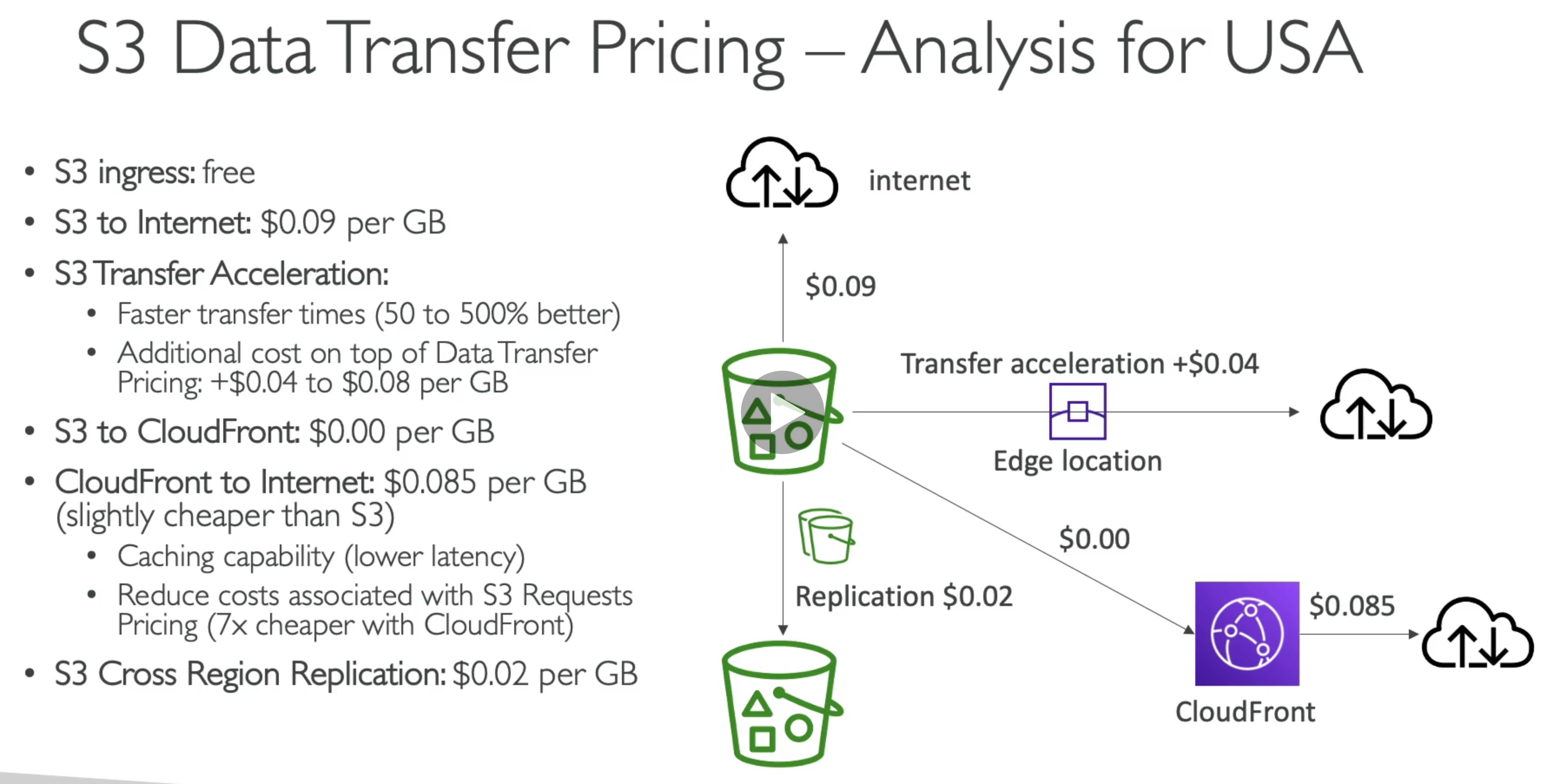

AWS CloudFront

- CDN

- improves read performance, content is cached at the edge locations

- 216 point of presence globally (edge locations)

- DDoS protection, integration with Shield, AWS web application firewall

- can expose external HTTPS and can talk to internal HTTPS backends

Origins

- S3 bucket

- for distributing files and caching them at the edge

- enhanced security with CloudFront OAI (Origin Access Identity), this can block access directly to S3

- CloudFront can be used as an ingress (to upload files to S3)

- Custom Origin (HTTP)

- Application Load Balancer

- EC2 instance

- S3 Website (must first enable the bucket as a static S3 website)

- Any HTTP backend you want

CloudFront vs S3 Cross Region Replication

- CloudFront

- Global Edge Network

- files are cached for a TTL

- great for static content that must be available everywhere, maybe outdated for a while

- S3 Cross Region Replication

- must be setup for each region you want replication to happen

- files are updated in near real time

- read only

- great for dynamic content that needs to be available at low latency in few regions

CloudFront Signed URL / Signed Cookies

- you want to distribute paid shared content to premium users all over the world

- we can use CloudFront Signed URL / Cookie, we attach a policy with

- includes URL expiration

- includes IP ranges to access the data from

- Trusted Signers (which AWS accounts can create signed URLs)

- Signed URL: access to individual files (one signed URL per file)

- Signed Cookies: access to multiple files (one signed Cookie for many files)

Process

- the user is authenticated and authorized by the application

- the application sends a request to CloudFront to generate a Signed URL / Cookie

- the application sends the signed URL / Cookie back to the user

- the user uses the signed URL / Cookie to access the file in CloudFront

- CloudFront fetches the file from S3 and returns it to the user

CloudFront Signed URL vs S3 Pre-signed URL

- CloudFront Signed URL

- Allow access to a path, no matter the origin

- account wide key pair, only the root can manage it

- can filter by IP, path, date, expiration

- can leverage caching features

- S3 Pre-signed URL

- issue a request as the person who pre-signed the URL

- uses the IAM key of the signing IAM principal

- limited lifetime

CloudFront - Price Class

- You can reduce the number of edge locations for cost reduction

- 3 price classes

- price class all: all regions

- price class 200: most regions, but excludes the most expensive regions

- price class 100: only the least expensive regions

CloudFront - Multiple Origins

- to route to different kinds of origins based on the content type

- e.g. one origin is from ALB and another origin is from S3 bucket

- based on the path pattern

- /images/*

- /api/*

- /*
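Path-pattern routing can be sketched as first-match-wins over an ordered list, with the catch-all pattern last. The origin names and the mapping of patterns to origins are assumptions for illustration, and `fnmatchcase` only approximates the wildcard matching.

```python
from fnmatch import fnmatchcase

# Ordered list of (path pattern, origin); the catch-all pattern goes last.
BEHAVIORS = [
    ("/images/*", "s3-bucket-origin"),   # hypothetical origin names
    ("/api/*", "alb-origin"),
    ("/*", "default-origin"),
]


def pick_origin(path: str):
    """Return the origin of the first pattern that matches the request path."""
    for pattern, origin_name in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return origin_name
    return None


for request_path in ("/images/cat.png", "/api/v1/users", "/index.html"):
    print(request_path, "->", pick_origin(request_path))
```

Order matters here: if the catch-all came first it would shadow the more specific patterns.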

CloudFront - Origin Groups

- to increase high availability and do failover

- Origin Groups: one primary and one secondary origin

- if the primary origin fails, the second one is used (CloudFront will send the same request to the secondary origin)

CloudFront - Field Level Encryption

- protect user sensitive information through application stack

- adds an additional layer of security along with HTTPS

- sensitive information encrypted at the edge close to the user

- uses asymmetric encryption (public private key pair)

- usage

- the client sends sensitive information to the edge location

- the edge location uses the public key to encrypt the information

- the edge location sends the encrypted information to CloudFront

- CloudFront forwards the information all the way to the web server (CloudFront => ALB => Web Servers)

- Web server uses the private key to decrypt the information

AWS Global Accelerator

Global users for our application

- you have deployed an application and have global users who want to access it directly

- they go over the public internet, which can add a lot of latency due to many hops

- we wish to go as fast as possible through AWS network to minimize latency

Unicast IP vs Anycast IP

- Unicast IP

- one server holds one IP address

- Anycast IP

- all servers hold the same IP address and the client is routed to the nearest one

AWS Global Accelerator

- leverage the AWS internal network to route to your application

- 2 Anycast IP are created for your application

- the Anycast IP sends traffic directly to Edge locations

- the edge locations send the traffic to your application (through AWS private network)

Global Accelerator vs CloudFront

- they both use the AWS global network and its edge locations around the world

- both services integrate with AWS shield for DDoS protection

- CloudFront

- improves performance for both cacheable content (images / videos) and dynamic content (such as API acceleration and dynamic site delivery)

- content is served at the edge location

- Global Accelerator

- improves performance for a wide range of applications over TCP or UDP

- proxying packets at the edge to applications running in one or more AWS regions

- good fit for non-HTTP use cases: such as gaming (UDP), IoT (MQTT) or Voice Over IP

- good for HTTP use cases that require static IP (if use Route 53 Geo location, client browser will cache the IP address and redirect user to the old IP for a TTL)

- good for HTTP use cases that require deterministic, fast regional failover

AWS Snow Family

- Highly-secure, portable devices to collect and process data at the edge, and migrate data into and out of AWS

Snowball Edge (for data transfers)

- physical data transport solution

- alternative to moving data over the network

- pay per data transfer job

- provide block storage and Amazon S3 compatible object storage

- Snowball Edge Storage Optimized

- Snowball Edge Compute Optimized

Snowcone

- small, portable computing, anywhere, rugged and secure, withstands harsh environments

- light, 2.1 kg

- device used for edge computing, storage, and data transfer

- 8 TB usable storage

- must provide your own battery and cables

- can be sent back to AWS offline, or connect it to internet and use AWS datasync to send data

Snowmobile

- transfer exabytes of data (1 EB = 1000 PB = 1,000,000 TB)

- each snowmobile has 100 PB of capacity

- high security

- better than snowball if you transfer more than 10 PB

Edge computing

- process data while it’s being created on an edge location

- A truck on the road, a ship on the sea, a mining station underground (no internet access)

- these locations may have

- limited / no internet access

- limited / no easy access to computing power

- we setup a snowball / snowcone device to do edge computing

- eventually we can ship back the device to AWS

AWS OpsHub

- Historically, to use Snow Family devices, you need a CLI

- today, you can use AWS OpsHub (a software you install on your computer / laptop) to manage your snow family devices

- unlocking and configuring single or clustered devices

- transferring files

- launching and managing instances running on Snow family devices

- monitor device metrics (storage capacity, active instances)

- launch compatible AWS services on your devices

Snowball into Glacier

- Snowball cannot import to Glacier directly

- you must use Amazon S3 first, in combination with an S3 lifecycle policy

AWS Storage Gateway

- Bridge between on-premises data and cloud data in S3

- use cases: disaster recovery, backup and restore, tiered storage

File Gateway

- configured S3 buckets are accessible using the NFS and SMB protocol

- supports S3 standard, S3 IA, S3 One Zone IA

- bucket access using IAM roles for each File Gateway

- most recently used data is cached in the File Gateway

- can be mounted on many servers

- integrated with AD (Active Directory) for user authentication

- On-premises servers communicate with File Gateway (optionally with Authentication)

- File Gateway communicates with S3 buckets

Volume Gateway

- block storage using iSCSI protocol backed by S3

- backed by EBS snapshot which can help restore on-premises volumes

- cached volumes: low latency access to most recent data

- stored volumes: entire dataset is on premises, scheduled backups to S3

- On-premises servers communicate with Volume Gateway using iSCSI protocol

- Volume Gateway communicates with S3 bucket to create EBS snapshots

- Volume Gateway is often used as data backup

Tape Gateway

- some companies have backup processes using physical tapes

- with tape gateway, companies use the same processes but, in the cloud

- Virtual Tape Library (VTL) backed by Amazon S3 and Glacier

- backup data using existing tape-based processes

Storage Gateway - Hardware appliance

- if you don’t have an on-premises virtual server, you can buy a hardware appliance from Amazon

AWS FSx

AWS FSx for Windows

- EFS is a shared POSIX file system for Linux systems

- FSx is a fully managed Windows file system share drive

- supports SMB protocol and Windows NTFS

- Microsoft Active Directory integration, ACLs, user quotas

- can be accessed from your on-premises infrastructure

- can be configured to be Multi-AZ

- data is backed up daily to S3

Amazon FSx for Lustre

- Lustre is a type of parallel distributed file system, for large scale computing

- the name Lustre is derived from Linux and Cluster

- Machine Learning, High Performance Computing

- Video processing, Financial Modeling and Electronic Design Automation

- Seamless integration with S3

- can be used from on-premises servers

FSx File System Deployment Options

- Scratch file system

- temporary storage

- data is not replicated

- high burst

- usage: short-term processing, optimize costs

- Persistent File System

- long-term storage

- data is replicated within same AZ

- replace failed files within minutes

- usage: long-term processing, sensitive data

AWS Transfer Family

- a fully managed service for file transfers into and out of Amazon S3 or Amazon EFS using the FTP protocol

- supported protocols

- AWS Transfer for FTP (File Transfer Protocol)

- AWS Transfer for FTPS (File Transfer Protocol over SSL)

- AWS Transfer for SFTP (SSH File Transfer Protocol)

- Managed infrastructure, scalable, reliable, highly available

- pay per provisioned endpoint per hour + data transfers in GB

- store and manage users’ credentials within the services

- integrate with existing authentication systems (Microsoft Active Directory, LDAP, Okta…)

AWS Storage Comparison

- S3: Object Storage

- S3 is object storage. It is serverless, so you don’t have to provision capacity ahead of time. It has deep integration with many other AWS services.

- Glacier: Object Archival

- Glacier is for object archival. This is for when we want to store objects for a long period of time and retrieve them very rarely. When we do retrieve these objects, they take a long time to get back to us because they are archived.

- EFS: Network File System for Linux instances, POSIX file system

- EFS is Elastic File System, a network file system for Linux instances. It is a POSIX file system, so again that means Linux. It is accessible from all your EC2 instances at once, so it is shared, and shared across AZs.

- FSx for Windows: Network File System for Windows servers

- FSx for Windows is the same thing as EFS, but for Windows. So it’s a network file system for your Windows servers.

- FSx for Lustre: High performance computing Linux file system

- Lustre stands for Linux and cluster, so FSx for Lustre is a Linux file system for High Performance Computing. This is where you run your HPC workloads. You get very high IOPS and very large capacity, and it integrates with S3 on the back end.

- EBS volume: network storage for one EC2 instance at a time

- An EBS volume is network storage for one EC2 instance at a time only. It is bound to the specific availability zone you create it in. To change the AZ, you need to create a snapshot, move that snapshot over, and create a volume from it.

- Instance Storage: physical storage for your EC2 instance (high IOPS)

- Instance Storage is physical storage for your EC2 instance. Because it is attached to the hardware, it has much higher IOPS than EBS. EBS volumes, as we remember, go up to 16,000 IOPS, or 64,000 IOPS for io1. With Instance Storage, because it is physically attached to your EC2 instance, some instance types can reach millions of IOPS. The risk is that if your EC2 instance goes down, you lose that storage permanently.

- Storage Gateway: file gateway, volume gateway, tape gateway

- Storage Gateway transports files from on premises to AWS. We have File Gateway, Volume Gateway (cached and stored), and Tape Gateway, each with its own use cases.

- Snowball / Snowmobile: to move large amount of data to the cloud, physically

- Finally, Snowball / Snowmobile physically move large amounts of data to the cloud, into S3.

- database: for specific workloads, usually with indexing and querying

Amazon SQS (Simple Queue Service)

- Fully managed service, used to decouple applications

- attributes

- unlimited throughput, unlimited number of messages in queue

- default retention of messages: 4 days, maximum of 14 days

- low latency

- limitation of 256KB per message sent

- can occasionally have duplicate messages (at-least-once delivery)

- can have out-of-order messages (best-effort ordering)

Producing Messages

- Produced to SQS using the SDK (SendMessage API)

- the message is persisted in SQS until a consumer deletes it

Consuming Messages

- consumers (running on EC2 instances, servers, or AWS Lambda)

- poll SQS for messages (receive up to 10 messages at a time)

- process the messages (example: insert the message into an RDS database)

- delete the messages using the DeleteMessage API
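
The poll, process, delete cycle above can be sketched as follows. This is a minimal illustration, not a reference implementation: it assumes `sqs` is a boto3 SQS client (or any object exposing the same `receive_message` and `delete_message` calls), and `consume_once` and `handle` are hypothetical names. The 20-second long-polling wait is an assumption, not something the notes specify.

```python
from typing import Any, Callable


def consume_once(sqs: Any, queue_url: str, handle: Callable[[str], None]) -> int:
    """Poll the queue once, process each message, then delete it.

    Returns the number of messages handled.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,      # long polling (assumed value)
    )
    handled = 0
    for message in response.get("Messages", []):
        handle(message["Body"])  # e.g. insert the message into an RDS database
        # Delete only after processing succeeded; if handle() raises, the
        # message stays in the queue and becomes visible again later.
        sqs.delete_message(
            QueueUrl=queue_url,
            ReceiptHandle=message["ReceiptHandle"],
        )
        handled += 1
    return handled
```

A consumer would typically call `consume_once` in a loop. Deleting after processing, rather than before, is what gives the at-least-once behavior described in these notes.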

Multiple EC2 instances consumers

- consumers receive and process messages in parallel

- at least once delivery (another consumer will receive the message if the first consumer didn’t process it fast enough)

- best-effort message ordering

- consumers delete messages after processing them

- we can scale consumers horizontally to improve throughput of processing

SQS with Auto Scaling Group

- CloudWatch monitors the queue length (the ApproximateNumberOfMessagesVisible metric)

- if the queue gets too long, a CloudWatch alarm is triggered

- Auto Scaling group will increase the number of EC2 instances if the alarm is triggered

SQS Security

- encryption

- in flight encryption using HTTPS API

- at rest encryption using KMS keys

- client side encryption if the client wants to perform encryption / decryption itself

- Access control: IAM policies to regulate access to the SQS API

- SQS access policies (similar to S3 bucket policies)

SQS Queue Access Policy

- Cross Account Access

- if another account needs to poll messages from the queue, we can attach a policy to the queue that names that account and allows it to call the ReceiveMessage API

- Publish S3 event notifications to an SQS queue

- we upload an object to an S3 bucket

- the S3 bucket sends an event message to the SQS queue

- the queue needs a policy allowing the bucket to call the SendMessage API on it
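
As a rough sketch of the S3 case, the queue access policy is a JSON document in the same format as IAM policies. The helper name `s3_to_sqs_policy` and the ARNs in the usage note are placeholders for illustration. The condition keys restrict the permission to one bucket in one account.

```python
import json


def s3_to_sqs_policy(queue_arn: str, bucket_arn: str, account_id: str) -> str:
    """Build a queue access policy that lets one S3 bucket send messages."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowS3SendMessage",  # hypothetical statement id
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                "Condition": {
                    # Only events coming from this bucket, owned by this account
                    "ArnLike": {"aws:SourceArn": bucket_arn},
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

For example, `s3_to_sqs_policy("arn:aws:sqs:us-east-1:111122223333:my-queue", "arn:aws:s3:::my-bucket", "111122223333")` returns a string that could be set as the queue's `Policy` attribute. For cross-account polling the shape is the same, with the other account as `Principal` and `sqs:ReceiveMessage` as the action.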

SQS - Message Visibility Timeout

- after a message is polled by a consumer, it becomes invisible to other consumers

- by default, the message visibility timeout is 30 seconds

- that means the message has 30 seconds to be processed and deleted from the queue

- after the message visibility timeout is over, the message is visible in SQS for other consumers to receive

- if a message is not processed within the visibility timeout, it will be processed again by other consumers

- a consumer could call the ChangeMessageVisibility API to get more time

- if visibility timeout is high (hours), and consumer crashes, re-processing will take time

- if visibility timeout is too low (seconds), we may get duplicates
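
To make the timing concrete, here is a toy in-memory model of the visibility timeout. It is not how SQS is implemented and uses no AWS API. `ToyQueue` and its methods are hypothetical names, and time is passed in explicitly as `now` (in seconds) so the behavior is easy to follow.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyQueue:
    """Toy model: each message records the time until which it is invisible."""

    visibility_timeout: float = 30.0  # same default as SQS
    _invisible_until: Dict[str, float] = field(default_factory=dict)

    def send(self, body: str) -> None:
        self._invisible_until[body] = 0.0  # visible immediately

    def receive(self, now: float) -> Optional[str]:
        for body, until in self._invisible_until.items():
            if now >= until:
                # Hide the message from other consumers for the timeout window
                self._invisible_until[body] = now + self.visibility_timeout
                return body
        return None

    def change_visibility(self, body: str, now: float, timeout: float) -> None:
        # Mirrors the idea of ChangeMessageVisibility: ask for more time
        self._invisible_until[body] = now + timeout

    def delete(self, body: str) -> None:
        self._invisible_until.pop(body, None)
```

In this model, a message received at time 0 is hidden until time 30. If the consumer neither deletes it nor extends the timeout, a second `receive` at time 30 or later returns the same message again, which is where duplicates come from.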

SQS - Dead Letter Queue

- if a consumer fails to process a message within the visibility timeout, the message goes back to the queue

- we can set a threshold of how many times a message can go back to the queue

- after the Maximum receives threshold (maxReceiveCount in the redrive policy) is exceeded, the message goes into a dead letter queue (DLQ)

- useful for debugging

- make sure to process the messages in the DLQ before they expire

- good to set a retention of 14 days in the DLQ
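
A minimal sketch of the queue attributes involved, assuming they are later passed to the SetQueueAttributes API (for instance `set_queue_attributes(QueueUrl=..., Attributes=...)` on a boto3 SQS client). The function names and the default threshold of 3 are illustrative assumptions.

```python
import json
from typing import Dict

FOURTEEN_DAYS_SECONDS = 14 * 24 * 60 * 60  # 1,209,600 s, the SQS maximum


def source_queue_attributes(dlq_arn: str, max_receive_count: int = 3) -> Dict[str, str]:
    """Attributes for the source queue: where failed messages go, and when."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    redrive_policy = {
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # SQS expects the redrive policy as a JSON string, not a nested dict
    return {"RedrivePolicy": json.dumps(redrive_policy)}


def dlq_attributes() -> Dict[str, str]:
    """Attributes for the DLQ itself: keep messages for the full 14 days."""
    return {"MessageRetentionPeriod": str(FOURTEEN_DAYS_SECONDS)}
```

The long retention on the DLQ gives time to inspect failed messages before they expire.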

SQS - Request - Response Systems

- producers send a request, including the reply-to queue ID, to the Request queue

- responders receive the request from the Request queue and process it

- after processing, responders send the response to the correct Response queue using the queue ID in the request

- to implement this pattern: use the SQS Temporary Queue Client

- it leverages virtual queues instead of creating / deleting SQS queues (cost effective)

SQS - Delay Queue

- delay a message (consumers don’t see it immediately) up to 15 minutes

- default is 0 seconds (message is available right away)

- can set a default at queue level

- can override the default on send using the DelaySeconds parameter
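
As an illustration of the per-message override, the helper below builds the keyword arguments for a SendMessage call and validates the 15-minute limit. `delayed_send_kwargs` is a hypothetical helper. The result would be passed as `sqs.send_message(**kwargs)` on a boto3 client.

```python
from typing import Any, Dict, Optional

MAX_DELAY_SECONDS = 15 * 60  # SQS allows delays from 0 up to 900 seconds


def delayed_send_kwargs(
    queue_url: str, body: str, delay_seconds: Optional[int] = None
) -> Dict[str, Any]:
    """Build SendMessage arguments, optionally overriding the queue's delay."""
    kwargs: Dict[str, Any] = {"QueueUrl": queue_url, "MessageBody": body}
    if delay_seconds is not None:
        if not 0 <= delay_seconds <= MAX_DELAY_SECONDS:
            raise ValueError("delay_seconds must be between 0 and 900")
        kwargs["DelaySeconds"] = delay_seconds
    # When DelaySeconds is omitted, the queue-level default applies
    return kwargs
```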

SQS - FIFO queue

- First In First Out

- limited throughput

- exactly-once send capability (by removing duplicates); a message that fails to be processed becomes visible again after the visibility timeout and keeps its position within its message group

- messages are processed in order by the consumer
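
Sending to a FIFO queue needs two extra parameters: a message group ID, which defines the ordering scope, and a deduplication ID, which SQS uses to drop repeats. The sketch below derives the deduplication ID from a SHA-256 hash of the body, which is one possible choice. `fifo_send_kwargs` is a hypothetical helper whose result would be passed to `send_message` on a boto3 client.

```python
import hashlib
from typing import Dict


def fifo_send_kwargs(queue_url: str, body: str, group_id: str) -> Dict[str, str]:
    """Build SendMessage arguments for a FIFO queue."""
    if not queue_url.endswith(".fifo"):
        raise ValueError("FIFO queue names must end with .fifo")
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        # Messages sharing a group ID are delivered in order
        "MessageGroupId": group_id,
        # Same body -> same ID, so a retried send is treated as a duplicate
        "MessageDeduplicationId": hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }
```

Hashing the body means two intentionally identical messages sent close together would also be collapsed into one. If that matters, an application-level unique ID is a better deduplication key.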

Amazon SNS

- the event producer only sends messages to one SNS topic

- as many event receivers (subscriptions) as we want can listen to the SNS topic notifications

- each subscriber to the topic will get all the messages (note: a newer feature allows filtering messages)

- subscribers can be

- SQS

- HTTP / HTTPS

- Lambda

- Emails

- SMS messages

- Mobile notifications

SNS integrates with a lot of AWS services

- many AWS services can send data directly to SNS for notifications

- CloudWatch (for alarms)

- Auto Scaling Groups notifications

- Amazon S3 (on bucket events)

- CloudFormation (upon state changes => failed to build etc…)

- etc…

How to publish

- topic publish (using SDK)

- create a topic